Something new was in the air. It was July 1995, the hottest New York summer in a decade and a half, but heat wasn't making the headlines. Tech was. Within weeks, tech's 900-pound gorilla would conquer the Empire State Building, turning it red, yellow, and green to celebrate Windows 95's launch. Web browsers were the hot new thing—or so Netscape's PR team was telling everyone who would listen, ahead of their upcoming IPO. The frenzy of the next half-decade would bring unprecedented tech riches, the ultimate triumph of the nerds.

But that was then, and this was now. Everything was just starting. Floppies were far more common than CDs. Cell phones made calls—just calls. The internet had only been fully privatized two months earlier. The goldmine was barely even discovered.

Up a narrow flight of stairs littered with the landlady's children's shoes, programmer Robert Morris' apartment felt closer to Silicon Valley than Wall Street. Companies are supposed to have offices and desks, pomp and circumstance—and a company is what Morris and his roommate Paul Graham wanted to start, something that would put their skills together and make their long academic careers finally pay off. But all they had was a bunch of computers and some top-notch brains.

"We don't know anything about business," mused the team, as Graham related later to Founders at Work[1] author Jessica Livingston, "but we're good programmers. Maybe if we write a really good program, we'll make something all these users will want and we'll get lots of users and then some big company will buy us."

Ideas don't come fully formed. As Morris and Graham batted around potential programs they could build, one stuck out—perhaps inspired by living in the shadow of the Met, the Guggenheim, and dozens of other museums. The internet promised to "provide organizations with new means to do business," as Netscape's IPO prospectus said[2]. Why not apply that to museums? Photograph and catalog their artwork, put it online, and let anyone on earth wonder at the detail in a Picasso or Monet from the comfort of their homes. Thus, Atrix, a program to put museums online.

Museum curators had different ideas. "The problem was, art galleries didn't want to be online," said Graham. It would take over 16 years before Google would finally bring much of the western world's art and the museum experience to the web.

Atrix for museums wouldn't work, but the idea wouldn't die. "Maybe we should make something people actually did want," thought Graham. If museums didn't want to go online, who did?

Retailers. With inventory to be photographed, cataloged, and put online, stores were the obvious next choice. Amazon.com had sold its first book just three months earlier, after a year of being called first Cadabra, then Relentless.com[3]. Now was the time to put your store online—and the software to do it wasn't all that different from what it'd take to put a museum online. All you needed to add was a shopping cart.

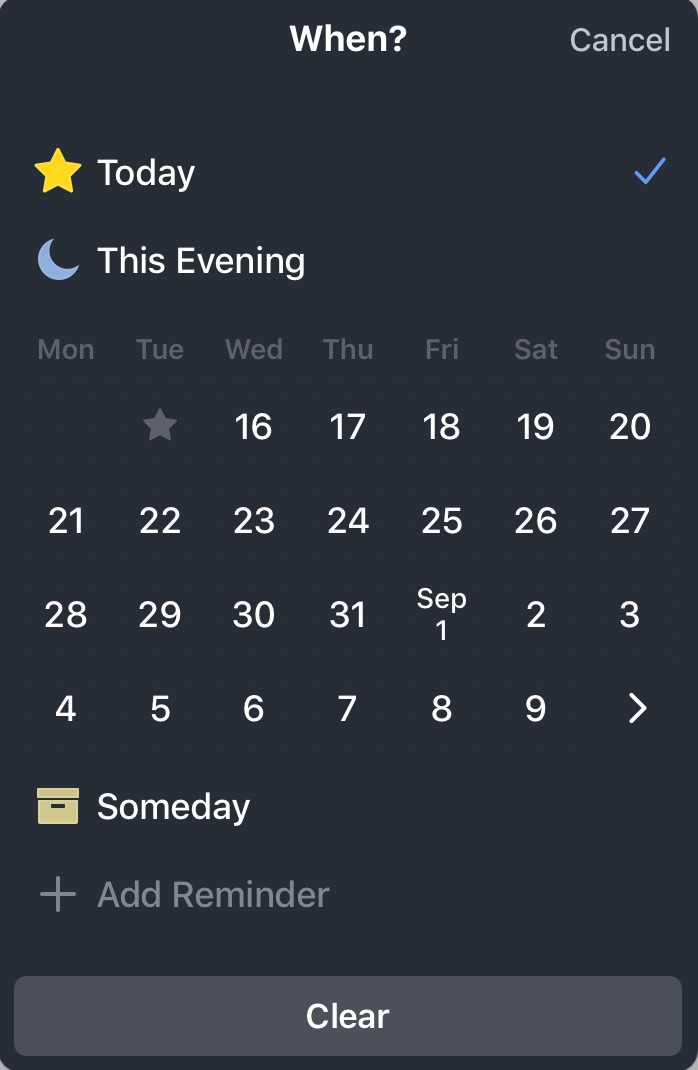

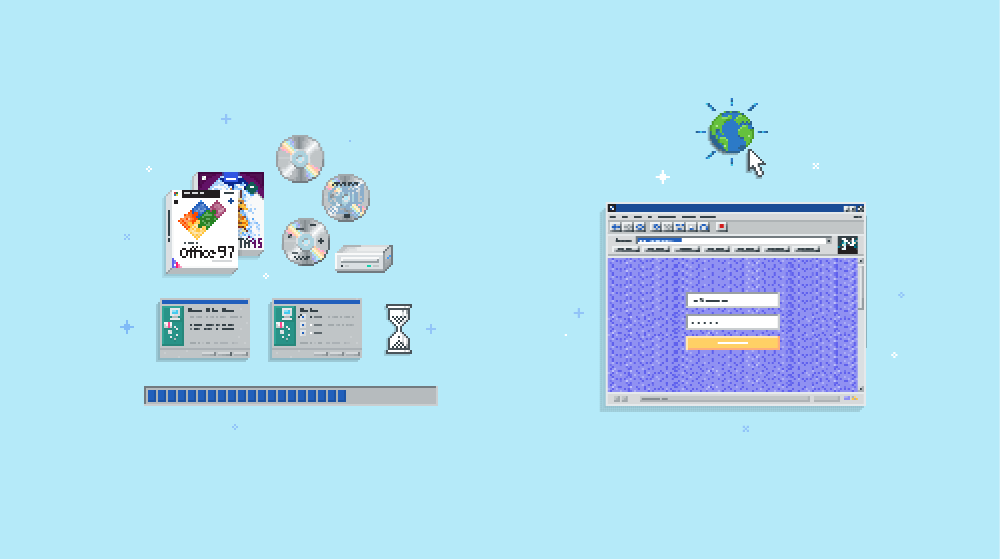

The store would live on the web, that much was certain. The question was how to make the software to build those store websites. You could code a Windows app and ship it in a shrink-wrapped box. That's how you bought Norton Utilities, Photoshop 3.0, and Microsoft Office—on a CD or an insane number of floppies (Office took 34). Even the internet came in a box, with tech publisher O'Reilly shipping a browser, an email app, and a book about the internet in a box[4]. Microsoft's own Internet Explorer was only available if you bought Microsoft Plus—in a box. The App Store of the day was literally a store.

Boxes and disks were the last thing Graham and Morris wanted. "The prospect of having to write desktop software was horrifying to us," said Graham. They'd spent their academic careers developing terminal software, with simple, logical code. Perfect for developers—not so much for the rest of us. Windows made software much easier for most people to use, and that much harder for developers to build. Where the simplest Hello, World! program would take 5 lines of code, it could take 150 lines of code to make the same program in Windows. As Graham said, "Neither of us knew how to write Windows software and we didn't want to learn."

Plus, even if you built online store builder software for Windows, customers would still have to find it in a store, buy it, install it on their PC, reboot, run the software, then figure out how to get their site online. It was already a stretch to get customers to purchase and install any software, much less convince them their business needed to go online. Any extra friction was too much.

Morris puzzled over it by day, Graham by night, coding when they preferred and emailing the latest code back and forth to pass the baton. Remote work, separated by an apartment wall and time.

Something clicked one hot July afternoon, waking Graham up after another 4AM coding session. "Hey, maybe we could make this run on the server and have the user control it by clicking on links on a web page," thought Graham. The software could run on the server, with a web page as the interface customers would use. "I sat up in bed, like the letter L, thinking, 'We have got to try this.'"

Thus, Viaweb. Instead of boxed software running on a Windows desktop, you'd build your store website online. Fill out some forms, upload a few photos, and voilà, the server's software would work its magic and build your online store. Without a web server or hosting account of your own to figure out, you could get your business online in minutes.

Software without installing anything. Applications on the web. Store builders that worked on any browser, any computer, Windows and Macintosh alike.

Via web.

"Progress happens when all the factors that make for it are ready and then it is inevitable." - Henry Ford, The Business of America

Innovation's a funny thing. Someone discovers white phosphorus, another finds that a bit of heat turns it into red phosphorus, then one day another person puts two and two together and invents the safety match.

Congratulations, we say. You changed the world.

Truth be told, we're all building on what comes before. "Whenever there’s a major breakthrough, there’s usually others on the same path," observes Kirby Ferguson in Everything is a Remix[5]. "The most dramatic results can happen when ideas are combined."

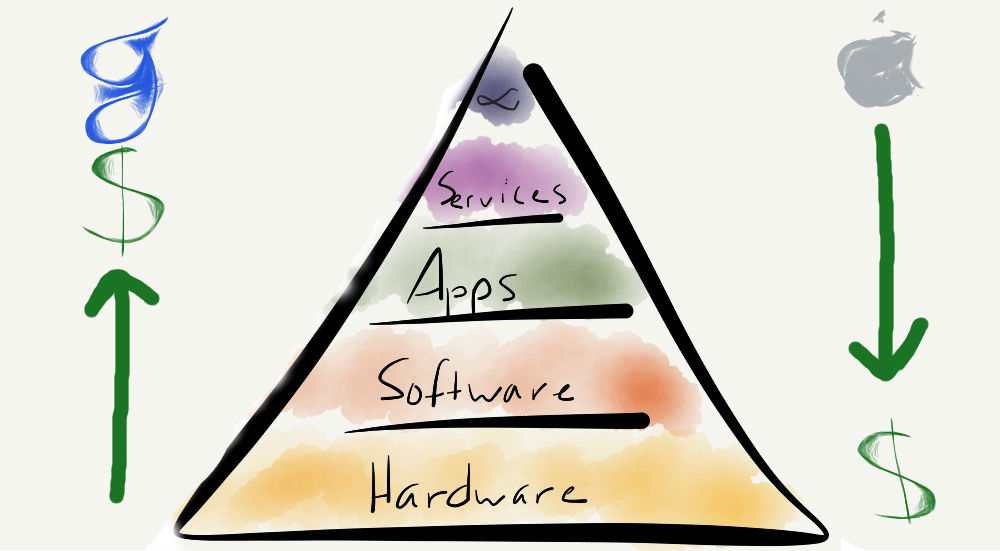

Computers brought software, which made more people want to use computers, which made us figure out how to share them—even over vast distances.

And in the early 1990s, computer technology was all coming together. Put PCs, software, and the web together, and you just might change the world.

"Most of the hackers we knew used this program called X Windows, where you could be using a program that was running on some remote machine, but it would be drawing stuff on your screen," said Graham. "All the brains were on the server."

"Could we just treat the browser like an xterm (a terminal that only ran X Windows), and have the application running on the server?"

That was the killer idea, the paradigm change of web-based software.

"A lot of people could have been having this idea at the same time, of course," Paul Graham wrote[6] six years later, "but as far as I know, Viaweb was the first Web-based application."

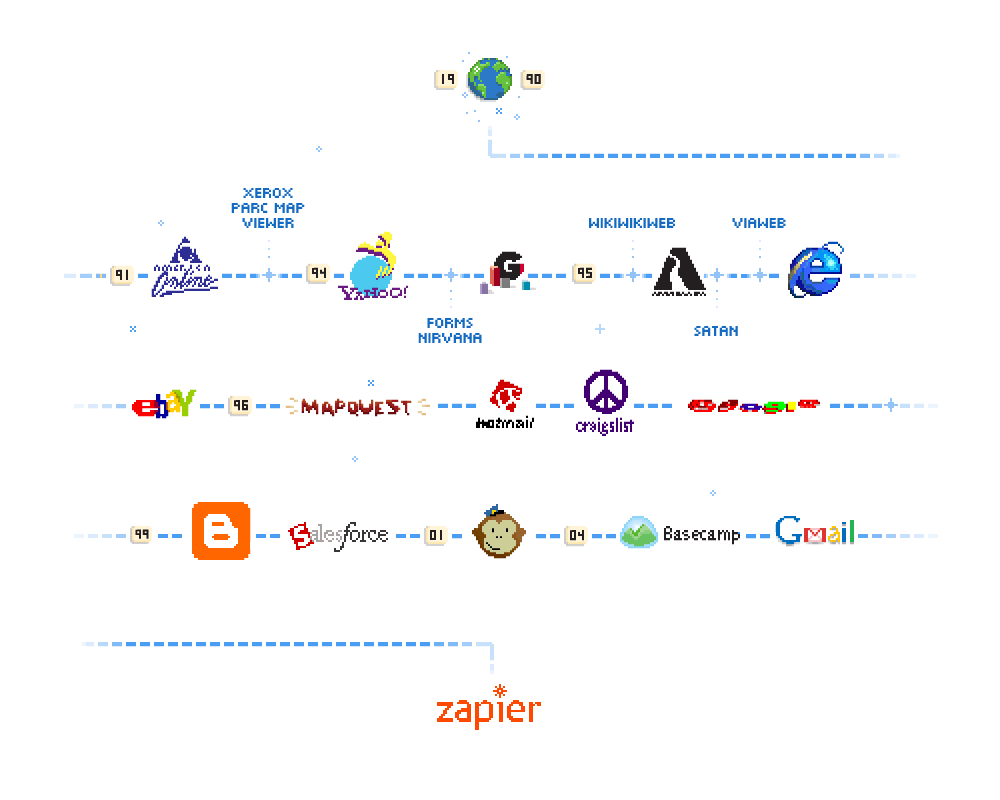

That piqued our curiosity. We take web apps for granted today. They're the reason Zapier exists, the way so many apps we rely on today work—from Amazon to Zoho, Gmail to WordPress. Were there prehistoric web apps that shaped the apps we use today, teams that beat Graham and Morris to the punch?

Where did it all start? For months we researched. A Hacker News thread[7] here, a Slashdot comment[8] there, and countless hours digging through old books about the internet and the Wayback Machine's invaluable snapshots of early websites started to paint a picture of the crucible of innovation that was the early world wide web.

There were a thousand flowers blooming on the web, it seemed—and five of the earliest web apps, including Viaweb, had lived to tell the tale.

The Path to the World Wide Web, the Information Mine

An ocean away and a half-dozen years earlier at the research institution best known today for the Large Hadron Collider, CERN's international group of researchers in Switzerland often felt like they were accelerating confusion instead of research. A German physicist would collaborate with a French chemist while referencing the work of Dutch and American researchers—and leave more research documents of their own.

"If we're sitting around a table, I'll start a sentence and you might help me finish it, and that's the way we all brainstorm," then-CERN fellow Tim Berners-Lee told author Walter Isaacson[9] years later. "How can we do that when we are separated?"

Computers so far had not proved that helpful. The first computers were advanced calculators, built to solve math problems like calculating the flight paths of rockets—or bombs. Soon enough, though, they became office tools, devices for calculating data, writing it up in reports, and emailing it to colleagues. They were far too valuable to only let one person use them at a time. You'd share them instead. Dozens of terminals could use the main computer at the same time, each with their own monitor and keyboard. It was like having your own personal computer—except the real power was in another room behind lock and key.

Slowly, the terminals moved further away. Instead of your monitor and keyboard being in a different room of the same building, you could have one in your school or home and connect to the real computer via the telephone network. It was on computers like these that Bill Gates cut his coding teeth, sharing a remote computer from a terminal in his school.

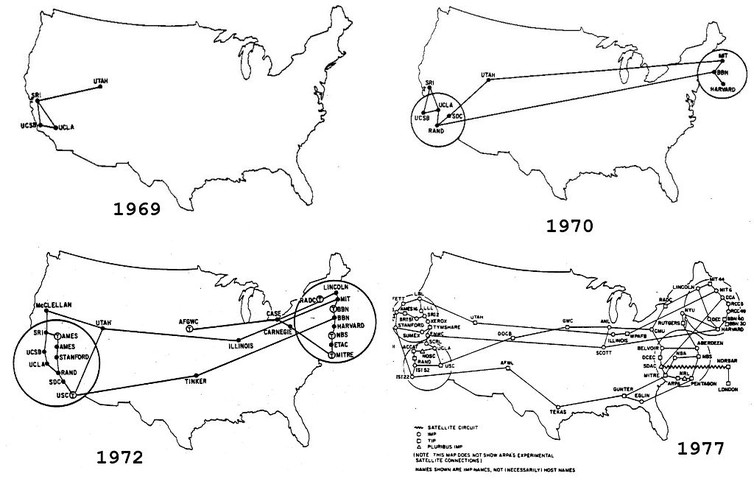

Connecting the computers themselves was the next leap. Instead of connecting only to a nearby university's computer from your terminal, the earliest internet—or ARPANET as it was called—let you access files and software on any other connected computer. A month after the first man stepped on the moon, the University of California, Los Angeles and Stanford Research Institute's computers were connected as the original ARPANET nodes—with the University of Utah and UC Santa Barbara to follow before the end of the year. The next year brought the East Coast's computers at MIT and the US government online, with a satellite connection to Norway finally making ARPANET international in 1973.

Suddenly it was possible to send an email message from Norway to Utah or run the early computer game Spacewar from MIT's computer in Cambridge, Massachusetts, while sitting in Santa Barbara, California. Before long, you didn't even have to run the program remotely. Starting in the late 1970s, microcomputers—what we now call personal computers or PCs—made it cheap enough to have your own computer on your own desk, crunching the numbers right at home without having to wait for time on a room-sized computer.

Yet, twenty years after ARPANET connected the first computers, things were messier than ever. Sure, you could now access files on other computers and email questions to researchers around the globe. But the internal confusion Berners-Lee's CERN team found was only compounded on the internet. Documents were there for the taking—if only you knew where to look on the more than 60,000 connected computers. The internet itself was just a network, a way to connect computers. You had to find the stuff you wanted yourself.

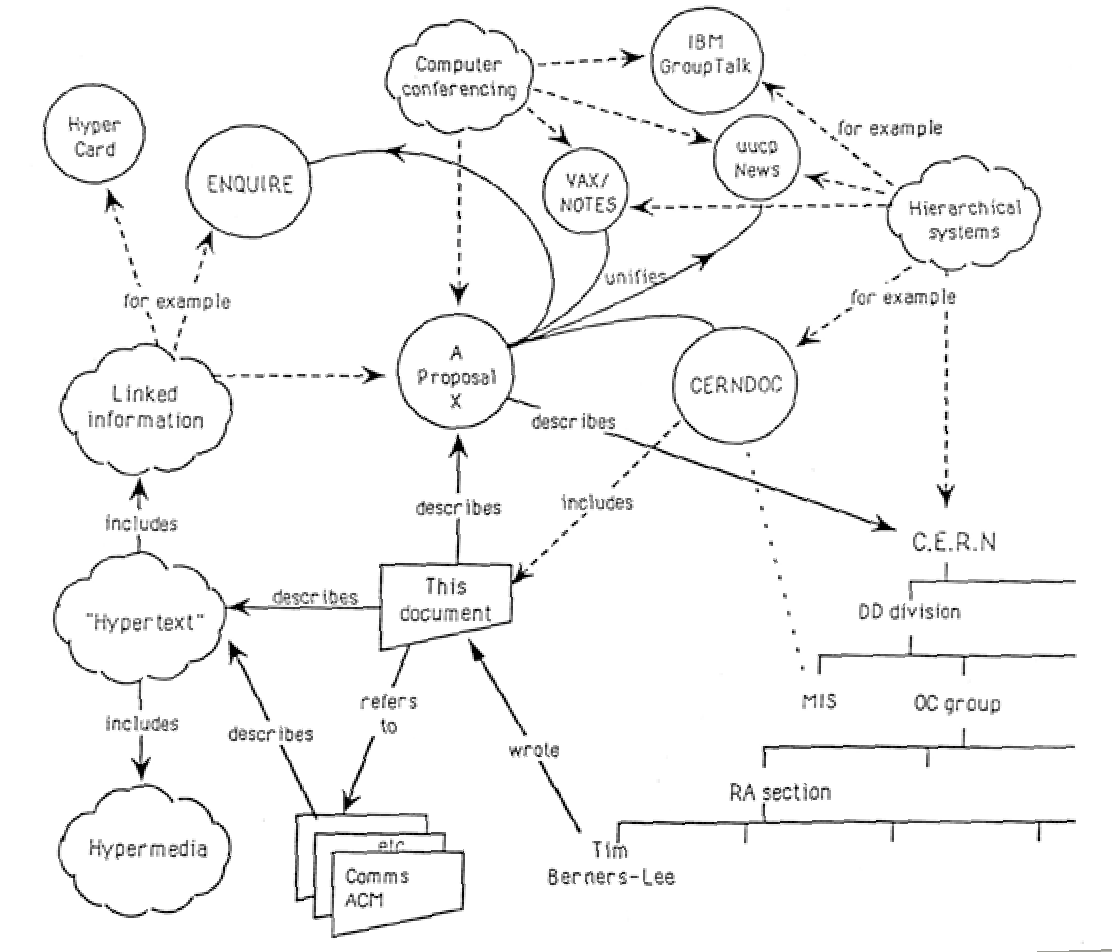

So in 1989, Tim Berners-Lee decided to add a notebook to the internet. "A 'web' of notes with links between them is far more useful than a fixed hierarchical system," wrote Berners-Lee[10] in his original proposal.

On the web, every page was supposed to be an app, or at least an interactive piece of digital paper filled with what Berners-Lee called hypertext (web pages even today are formatted in HTML—or HyperText Markup Language). You could include words and paragraphs in your page on the web, along with hyperlinks that connected words to other pages. In the web's original conception, anyone could add those links or change anything else on the page, much like you could mark up a paper book. "Computers could become much more powerful if they could be programmed to link otherwise unconnected information," thought Berners-Lee. In the original vision, there were no pictures, no animations. The entire dream centered on connections.

You could write a document, then I could write my own take on the topic and link to your document. A colleague could notice similarities and edit both of our documents with links to each other. The web as Berners-Lee imagined it was the original online notebook or word processor app.

It wasn't even that smart of an app. "There have always been things which people are good at, and things computers have been good at, and little overlap between the two," Berners-Lee later wrote. People were good at writing, finding connections between abstract ideas, and mashing them up to make something new. Computers were good at storing massive sets of data and organizing them logically, perhaps alphabetically or by date written.

Put humans and computers to work together, and you might have something special. That special thing is what Berners-Lee called the World Wide Web—with a system of website addresses to locate documents, HTML markup to format documents, and a browser to view and edit them.

It quickly became just the web—and before long, it was the only internet we remembered existed. It was on that web that Graham and Morris, and a few other pioneering coders, built their web apps.

Let There be Multimedia: The Web Gets Its First Interactive Online Maps

While researchers at CERN were probing the particles that make up the universe, Xerox's legendary research team at its PARC laboratory was dreaming up the future. It was here the laser printer was invented, along with Ethernet, Unicode, and electronic paper. One of the first personal computers, the Xerox Alto, debuted the original computer desktop with overlapping windows and a mouse—the computer that inspired Steve Jobs to build the Macintosh.

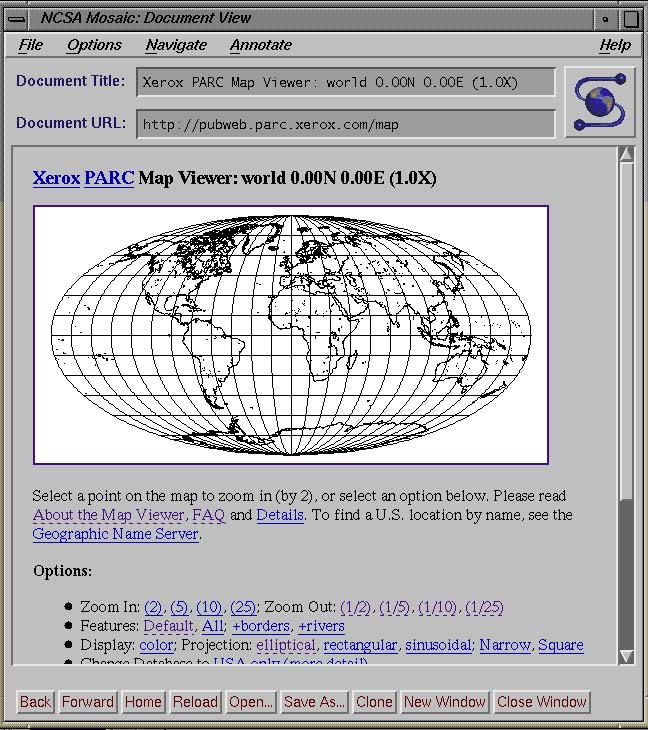

And in 1993, that's where researcher Steve Putz built the first online map app, "a custom map rendering service—yet another experiment in providing dynamic information retrieval via World Wide Web," wrote Putz[11] when he shared his invention with the world on June 21st. It was, perhaps, the actual first web app, if an interactive tool is enough to count.

The world wide web wasn't supposed to be this fun. Berners-Lee imagined the internet as a place to collaborate around text, somewhere to share research data and thesis papers—with tools to edit and author those documents, not just view them. The first popular web browser, NCSA Mosaic, turned that around, adding images to CERN's text-only document format—and dropping the Edit button. Personal computers brought the rest of us online—and we were happy enough without editing web pages.

The web became the latest book, magazine, and museum rolled into one—written and curated in thousands of hypertext documents. Goodbye Encarta CDs, hello Wikipedia.

The genie was out of the bottle, and the PARC team set out to see how far they could push it. Using the web's newfound love of images, the Xerox PARC Map Viewer showed a large map of the earth right on a web page. You could click anywhere on the map to zoom in—much like you would in modern map apps—or choose from map projection styles to see the planet the way you wanted.

It was all a bit of a trick—but the same trick Google Maps uses today. Instead of downloading the full map, it divided the map into small images and stitched them together to look like one seamless map. Each tiny image was linked to a zoomed-in view of that same area, which is what would load when you clicked on it.
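To make the trick concrete, here is a minimal Python sketch of the tiling idea as described above: cut the current view into a grid of small images and wrap each one in a plain HTML link to a zoomed-in view of just that tile's area. This is not Putz's code, which we haven't seen; the `Tile` type, the `/map` and `/tile` URL paths, and the query parameter names are all hypothetical.

```python
import html
from dataclasses import dataclass
from urllib.parse import urlencode


@dataclass(frozen=True)
class Tile:
    """A rectangle of the map in degrees (hypothetical type for this sketch)."""
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float

    def query(self) -> str:
        # Encode this rectangle as URL query parameters.
        return urlencode({
            "lat_min": self.lat_min, "lat_max": self.lat_max,
            "lon_min": self.lon_min, "lon_max": self.lon_max,
        })


def split_view(view: Tile, rows: int, cols: int) -> list[list[Tile]]:
    """Cut the current view into a rows x cols grid, northernmost row first."""
    lat_step = (view.lat_max - view.lat_min) / rows
    lon_step = (view.lon_max - view.lon_min) / cols
    grid = []
    for r in range(rows):
        top = view.lat_max - r * lat_step
        grid.append([
            Tile(top - lat_step, top,
                 view.lon_min + c * lon_step, view.lon_min + (c + 1) * lon_step)
            for c in range(cols)
        ])
    return grid


def render_page(view: Tile, rows: int = 2, cols: int = 4) -> str:
    """Plain HTML: each small image links to a zoomed-in view of its own area."""
    lines = ["<html><body>"]
    for row in split_view(view, rows, cols):
        cells = []
        for t in row:
            q = html.escape(t.query())  # "&" must be written as "&amp;" inside HTML
            cells.append(f'<a href="/map?{q}"><img src="/tile?{q}" border="0"></a>')
        # A <br> after each row stacks the tiles into what looks like one map.
        lines.append("".join(cells) + "<br>")
    lines.append("</body></html>")
    return "\n".join(lines)


world = Tile(-90.0, 90.0, -180.0, 180.0)
print(render_page(world))
```

Clicking a tile requests `/map` with that tile's bounds, and a server built this way would render the same kind of page again for the smaller area, so each click zooms in one level. Nothing beyond links and images is needed, which matters because browsers of the day had no scripting at all.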

"The HTML format and the HTTP protocol are used like a user interface tool kit to provide not only document retrieval but a complete custom user interface specialized for the application," explained Putz[12]. Users weren't creating anything new, but they were doing far more than Berners-Lee's plain text pages offered, enough that textbooks a half-decade later still recommended the Map Viewer as the tool students should use to find locations online.

Work on the Web: The First Online Form

PARC wasn't the only team pushing HTML pages to do more. Some 2,000 miles away and a year later at the University of Minnesota, the team that built Gopher—a protocol for organizing files as an alternative to Berners-Lee's World Wide Web—was trying to find its place in the world now that the web was becoming the way most people used the internet.

The web was everywhere. "I saw a URL on the side of a bus," Gopher development leader Mark McCahill[13] later said. "I knew Gopher would start winding down."

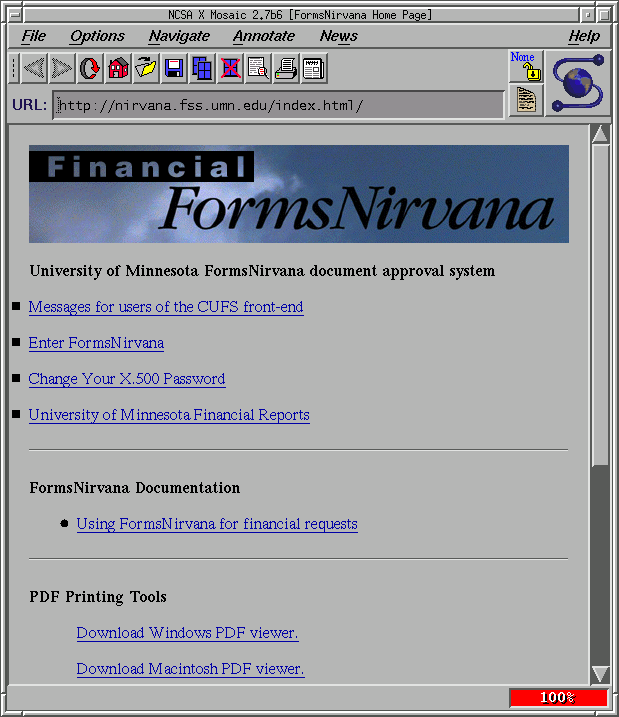

His team needed something new to do—and to them, building a web app was the obvious way to jump in. So they built an accounting app, perhaps the web's first custom form app. Forms Nirvana, they called it.

"The university always has big problems," said McCahill. Among them was an accounting scandal in which a surgeon was indicted for fraud and embezzlement (and later acquitted) and the National Institutes of Health threatened the university's funding, with the financial issues largely stemming from messy paperwork and inconsistent record keeping. Surely the web could help. McCahill pulled his Gopher team together, and off they set to HTML the problem away. "And because we were being ironic, we said, ‘Oh this will be Nirvana if we can get the purchase orders on-line and get them approved,’" reminisced McCahill[14] years later.

And they did it with one of the first online forms, launched in September 1994[15]. "We did a document routing and approval system for online documents," said McCahill. University faculty could enter grant info and purchase order details online—and the Forms Nirvana web app would store that data in the university's database.

"Your financial documents get processed and into the ledger right away, as opposed to being key punched in after two weeks," said McCahill. Not only did the web shrink distances, it shrank time too, apparently. And Forms Nirvana worked so well that the university relied on that early web app for the next 14 years.

Shouldn't Everything be Quicker? Editing Comes to the Web

The web's simplicity made it accessible, democratic even. The early adopters had built their early sites and pushed HTML's limits. Now it was time for the masses. By the end of 1994, sites like Tripod and GeoCities—then called Beverly Hills Internet[16]—let people build their own pages and publish them online, for free. But still, Tim Berners-Lee's vision of an editable web was little more than a dream.

"Sometimes innovation involves recovering what has been lost," wrote Walter Isaacson in The Innovators[9]. For the web, that came on March 25, 1995, with yet another early web app.

Universities and research centers weren't the only ones with more data than they could manage. Ward Cunningham found the same issues at testing device manufacturer Tektronix, where he spent his days coding operating systems for microcomputers, the type that ran machines and kept industry humming. And by night, he hacked together ways to store information better, something to make it easier to find the data his team needed among everything Tektronix staff wrote down.

The core problem was links. On standard web pages, you had to research the best links to share on your page—something Tim Berners-Lee thought others could add later by editing your page. But what if you could make links to pages that might or might not exist, and let the software figure out the rest? And what if you brought back the edit button to the web at the same time? Perhaps web software could be a bit smarter than Berners-Lee planned.

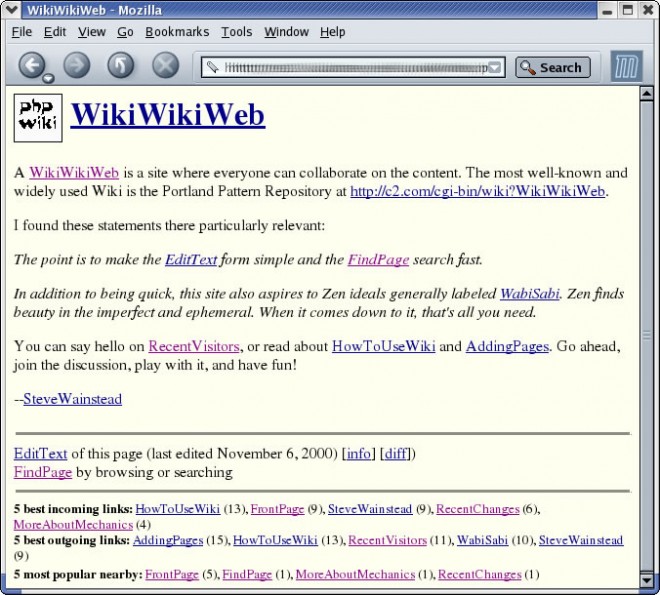

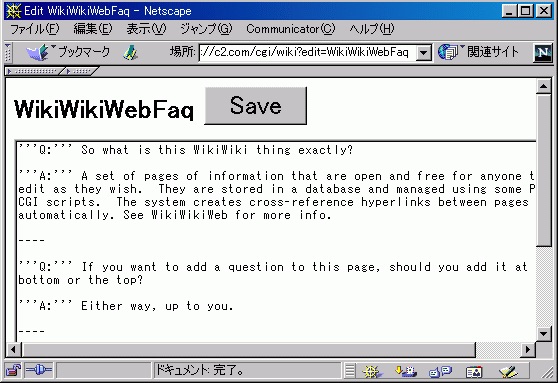

With Perl code on the server, a web form to enter and edit text, and HTML pages of copy, Ward Cunningham made the first editable webpage: WikiWikiWeb, from a Hawaiian word for quick.

"You're browsing a database with a program called WikiWikiWeb," wrote Cunningham[17] in the site's introduction when he launched it on March 25, 1995. "And the program has an attitude. The program wants everyone to be an author. So, the program slants in favor of authors at some inconvenience to readers."

Unlike other web pages, Cunningham's wiki had an edit page, one that finally fulfilled Berners-Lee's vision of the internet. Anyone could click Edit, modify the text, and save their changes. Moments later, the wiki software would produce a new HTML page and the site was changed for good. As you wrote, you'd run capitalized words together into WikiWords (names, especially), and the wiki would automatically turn them into links, even to pages nobody had written yet. It was a bit of extra smarts to do what humans were too lazy to do, in a web app. And with that, the web came full circle.
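The auto-linking rule is simple enough to sketch in a few lines. Below is a minimal Python version, assuming the original WikiWikiWeb convention that capitalized words run together (WikiWords) become links, and that a WikiWord with no page behind it yet gets a trailing "?" link inviting someone to write that page. Cunningham's real code was Perl; the `link_wiki_words` name and the `wiki?Page` and `wiki?edit=Page` URL formats here are illustrative assumptions.

```python
import html
import re

# A WikiWord is two or more capitalized chunks run together, e.g. "WikiWikiWeb".
WIKI_WORD = re.compile(r"\b(?:[A-Z][a-z]+){2,}\b")


def link_wiki_words(text: str, existing_pages: set[str]) -> str:
    """Turn WikiWords in plain text into HTML links (a sketch, not the original Perl)."""

    def to_link(match: re.Match) -> str:
        word = match.group(0)
        if word in existing_pages:
            # The page exists: link straight to it.
            return f'<a href="wiki?{word}">{word}</a>'
        # The page doesn't exist yet: show the word plus a "?" link to create it.
        return f'{word}<a href="wiki?edit={word}">?</a>'

    # Escape the author's text first so a stray "<" or "&" can't break the page.
    return WIKI_WORD.sub(to_link, html.escape(text))


pages = {"WikiWikiWeb", "FrontPage"}
print(link_wiki_words("Start at FrontPage, then ask WardCunningham about WikiWikiWeb.", pages))
```

The design choice is the one the article describes: authors never type a URL. The software decides what is a link, and a missing page is just an invitation to write it.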

The Devil in the Details

Networked computers were great—until they weren't. The web at the time was like the Wild West. You don't link thousands of computers together without something going wrong, eventually.

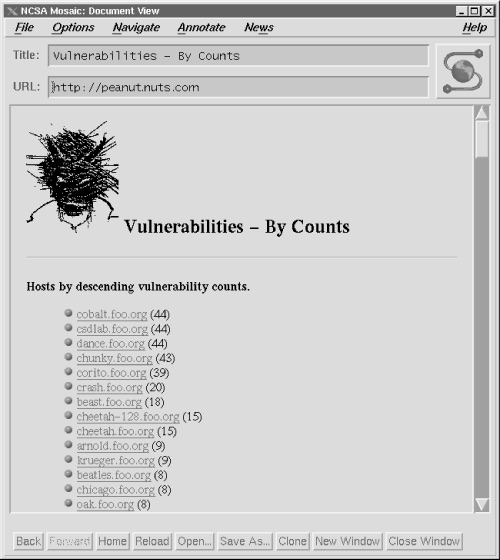

The internet already had security issues: one could often view and even edit most files on a server, or perhaps even remotely run programs on the computer that would change passwords and delete data. The web's explosive popularity only made it worse. Thorny problems like these attract the brightest minds, including Dutch programmer Wietse Venema, who'd already started writing security software. And in 1992, when American developer Dan Farmer was writing a research paper on network security, Venema was the co-author he wanted.

That is, if Farmer and Venema could manage the distance—easy today, not so much before the days of Gmail and Slack. "To my surprise, he was willing," said Farmer[18], "and we struck up a long-lasting friendship." Remote work, right at the beginning of the web.

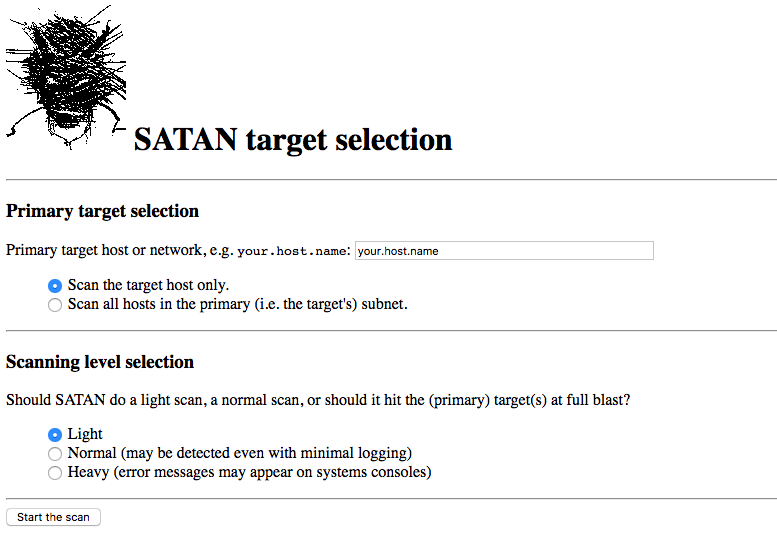

Over the next year they worked on the paper, collaborating over phone and email. "Wietse was the über technical guy," said Farmer, "and I was the idea guy who didn’t know enough to think my notions weren’t possible or not." So they brainstormed and theorized, then built SATAN—or Security Administrator Tool for Analyzing Networks—a "network Gatling gun" in Farmer's words, to analyze networks and see if they were right. Their finished paper, Improving the Security of Your Site by Breaking Into It[19], mentioned their testing tool in passing—and it struck a nerve.

"There was still a 'don't ask, don't tell' sort of mentality about security and no one wanted to talk about it, let alone give up their secrets," said Farmer. "The paper started people talking about how they really wanted this program."

The old debate over how to develop software struck again. You could build a standard terminal app—it'd feel familiar to researchers, but not to the general public. Developing a more familiar Windows app would require learning a whole new set of skills.

Or you could just use the web's HTML as your app's interface—it'd be an easy way to show the same text as a terminal app, only in a much more user-friendly way.

"We first started simply creating reports that could be viewed by a browser," said Farmer, "but then discovered if you tweaked a web server the right way you could actually interact with your browser and make it a more pleasing user experience, with buttons you clicked that would execute programs and dynamic links that would be generated on the fly, with a database in the backend stashing all the data and configurations."

With the new web-powered, remotely coded SATAN in hand, Farmer and Venema launched their network security tool on April 5, 1995. Instead of learning a new interface, you'd point your web browser at SATAN, enter the name of a server to check, and let it test your security and generate an HTML report on the fly.

It worked—too well, in fact. "It was so hyped up, many of the initial servers we had put up melted down on the 5th," remembered Farmer, "and I’d heard that many corporations closed down their company sites in order to ensure that they scanned their stuff before the Bad Gals did."

"It’s a bit quaint now, but at the time, bleeding edge."

Back in New York, Graham and Morris' July hackathon continued apace, gradually becoming a full web app that could build online catalogs, online. They built a website to showcase their product, and an account system for users to log in to their own store's site, much like the terminals of earlier time-sharing systems with their separate user accounts and personal files.

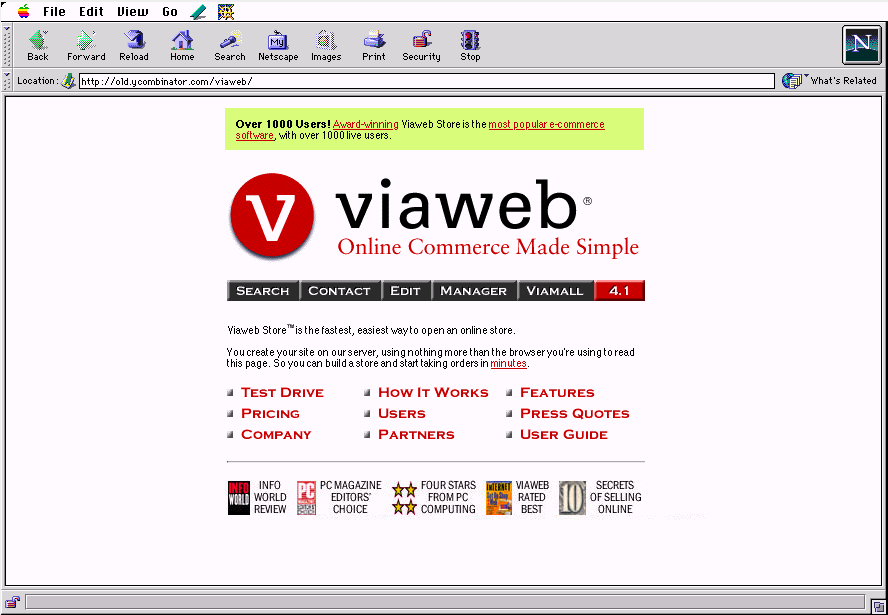

Computers were still expensive—at least the powerful ones that could run software for multiple users behind the scenes. The team bought a $3,000 server (fast for its day, with less than 1/25th of a modern smartphone's computing power), paid $350/month for their internet connection, and spent $5,000 on Netscape Commerce Server as "the only software that then supported secure http connections."[20]

Viaweb was taking shape. After weeks of coding, by August 12, 1995, they were ready to show it to the world[21]. Users could sign into their account, fill out an online form with their product info, choose from six layouts to organize their catalog, and select the font and button style they wanted. The web still didn't have JavaScript (something invented around the same time as Viaweb by Netscape developer Brendan Eich for building interactive websites) or a way to upload files through the browser. Instead, Viaweb users still needed to upload photos of their products via FTP—a longstanding internet protocol for accessing remote files. Another push of a button in Viaweb's website, and its server software would automatically create photo thumbnails, turn the text into button images, put everything together into HTML pages, and build a searchable database of the store's products.
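That final "push of a button" is, at heart, a static site generator. As a rough illustration only (Viaweb's own software was written in Lisp and did more, such as generating thumbnails and button images), here is a Python sketch that turns form-submitted product records into one HTML page per product, a catalog index page, and a tiny word-to-product search index. The `Product` fields, file names, and page layout are hypothetical.

```python
import html
import re
from dataclasses import dataclass


@dataclass
class Product:
    """One product as a merchant might enter it in a web form (hypothetical fields)."""
    sku: str
    name: str
    price_cents: int
    description: str
    photo: str  # file name of a photo uploaded separately, e.g. via FTP


def product_page(p: Product) -> str:
    """Render a single product as a standalone HTML page."""
    name = html.escape(p.name)
    return (
        f"<html><head><title>{name}</title></head><body>"
        f"<h1>{name}</h1>"
        f'<img src="{html.escape(p.photo)}" alt="{name}">'
        f"<p>{html.escape(p.description)}</p>"
        f"<p>${p.price_cents // 100}.{p.price_cents % 100:02d}</p>"
        '<p><a href="index.html">Back to catalog</a></p>'
        "</body></html>"
    )


def build_store(products: list[Product]) -> tuple[dict[str, str], dict[str, set[str]]]:
    """Return (file name -> HTML) for every page, plus a word -> SKUs search index."""
    pages: dict[str, str] = {}
    index: dict[str, set[str]] = {}
    links = []
    for p in sorted(products, key=lambda item: item.name):
        filename = f"{p.sku}.html"
        pages[filename] = product_page(p)
        links.append(f'<li><a href="{filename}">{html.escape(p.name)}</a></li>')
        # Index every lowercase word in the name and description for search.
        for word in re.findall(r"[a-z0-9]+", f"{p.name} {p.description}".lower()):
            index.setdefault(word, set()).add(p.sku)
    pages["index.html"] = (
        "<html><body><h1>Catalog</h1><ul>" + "".join(links) + "</ul></body></html>"
    )
    return pages, index


catalog = [
    Product("tee1", "Cotton T-Shirt", 1500, "Soft cotton tee", "tee1.gif"),
    Product("mug1", "Coffee Mug", 800, "Ceramic mug for coffee", "mug1.gif"),
]
pages, index = build_store(catalog)
print(sorted(pages))            # ['index.html', 'mug1.html', 'tee1.html']
print(sorted(index["coffee"]))  # ['mug1']
```

Because every page is generated ahead of time, shoppers only ever fetch ordinary HTML files; the merchant's web form is the only part that needs the server-side software.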

Minutes and a Commit button click later, they had built an online store using online software.

For $100 a month[22], anyone with a computer and a web browser could start an online store, from an app they never had to download and install. And it worked. Within three years, Viaweb's thousand customers—including NASA, Rolling Stone, and Cirque du Soleil—were collectively selling over $3.3 million per month.

All that time, "no one ever seemed to get that the software ran on the server," marveled Graham. "We would explain to people how the thing worked and give them a demo and they would say, 'Great. Where do I go to download it?'"

But no downloads were needed. Viaweb was a web app—or, "web-based software," as Viaweb's PR agency called it. It just took a web browser, and 10 minutes later you could have a store.

And a browser window away, you could zoom in and find your house on a map, submit an expense form, edit a wiki page about your hometown, and get SATAN to make sure your network was secure.

"Ten other people were probably working on similar things." - Paul Graham

Computers. Networks. Browsers. Inspiration. One thing led to another, and over the 26 months from June 1993 to August 1995, web apps became a thing. First an online map, then a forms tool, and then the wiki, which brought us full circle to the original vision of the web. A bit more time, and a team figured out how to test server security online while working remotely, and another built an app that built stores.

All apps, in one form or another, each doing more than what came before it, until you couldn't quite tell which was the first true web app.

And that's what we call history.

Yahoo! acquired Viaweb for $49 million in 1998[23], before Yahoo itself was acquired by Verizon this year[24]. Xerox shut down the Map Viewer in 2005[25]. The University of Minnesota relied on Forms Nirvana to manage its grant system until 2008, spending $50 million to replace that early web experiment[26]. WikiWikiWeb was vandalized by hackers in late 2014, and lives on today as a read-only site[27]—something closer to the first web pages than the interactive web it brought. SATAN is still available for posterity[28], though it is scarcely relevant to today's security challenges.

Yet their spirit lives on. Today's web would scarcely be recognizable to someone opening Internet Explorer for the first time in 1995. It's an interactive, app-fueled place with social networks, streaming video, and more content than one could ever hope to consume. It's where we crunch numbers in sheets, edit documents collaboratively, connect our business software, and tweak Wikipedia whenever something feels off.

Of course there are apps on the web. It'd hardly seem right any other way.

History is messy—and it's easy for the details to get lost to time. As Paul Graham told us via email, "Ten other people were probably working on similar things." These are the app stories we found, but we're sure other web apps were being built around the same time. We'd love to hear of any other early web apps you know of or inspirations that might have shaped today's web apps.